Here’s an article on how restic splits data:

I re-did the test, this time with 100 x 1MB files. I first added the combined file, then the 100 files. This was the output: `Files: 100 new, 0 changed, 0 unmodified`. Interestingly, only one file got any deduplication: `new /test/99, saved in 0.025s (751.531 KiB added)`. This was the last of the 100 files (since the first was named 00), and it coincided with the last 1024 KiB of the combined file. All the other 99 files were `(1024.000 KiB added)`.

Is there any way of getting these numbers closer to 100%? And in the 100 x 1MB test, why was the last file the only one to receive deduplication?

Thanks for the explanations, you’re right: the block size in the examples is too small for restic to efficiently deduplicate.
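To put the 100 x 1MB result in numbers, here is a small sketch that only redoes the arithmetic from the figures quoted above (99 files at 1024.000 KiB added, one at 751.531 KiB). The variable names are mine, and it assumes `awk` is available; it does not call restic at all.

```shell
#!/bin/sh
# Figures copied from the backup output quoted above; variable names are illustrative.
files=100
file_kib=1024.000
last_added_kib=751.531

summary=$(awk -v n="$files" -v size="$file_kib" -v last="$last_added_kib" 'BEGIN {
    total   = n * size                 # KiB contained in the 100 small files
    added   = (n - 1) * size + last    # KiB reported as newly added
    deduped = total - added            # KiB matched against the combined file
    printf "total:   %.3f KiB\n", total
    printf "added:   %.3f KiB\n", added
    printf "deduped: %.3f KiB (%.2f%%)\n", deduped, 100 * deduped / total
}')
echo "$summary"
```

So only about 272 KiB out of 100 MiB was recognised as already stored. As far as I understand restic's chunker, it cuts at content-defined boundaries and aims for chunks of roughly 1 MiB with a 512 KiB minimum, so a 1 MiB file rarely ends up with the same chunks as the matching region of the combined file.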

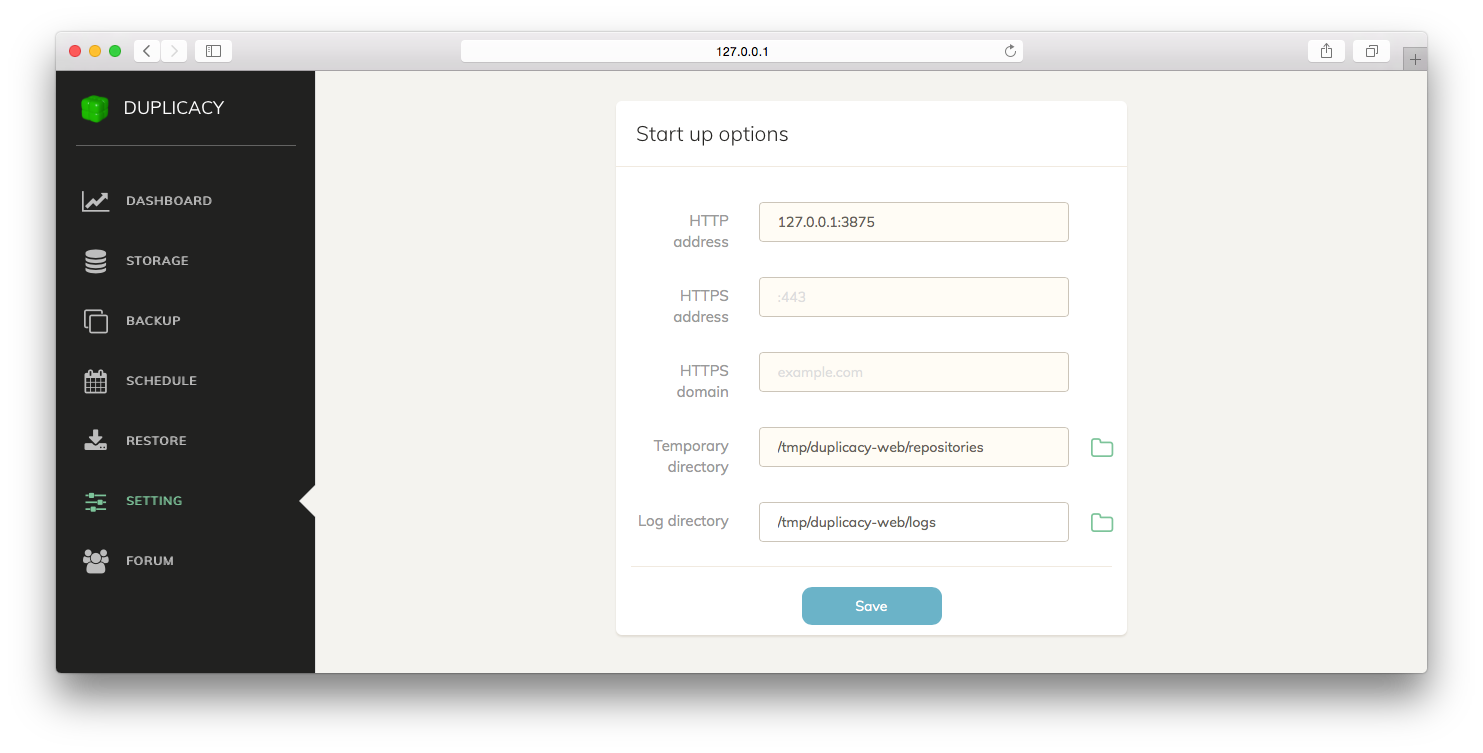

DUPLICACY VS DUPLICITY PASSWORD

I’m testing out the deduplication of restic. I generated 128MB of data, in 16 x 8MB files: `for i in …; do dd if=/dev/urandom of=$i bs=1M count=8; done`. Then I combined those files into a single 128MB file: `cat ? > combined`. I did a backup on the individual files, then on the combined file. The output of the combined run was: `% RESTIC_PASSWORD=X restic --verbose=4 backup --tag=test combined`, followed by `Repository 86b7fabe opened successfully, password is correct`.
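For anyone who wants to repeat the setup, here is a minimal sketch of the data generation. The original file names did not survive in the post, so `part_00` to `part_15` and the scratch directory are hypothetical, and the restic calls are left as comments that assume `RESTIC_REPOSITORY` and `RESTIC_PASSWORD` are already set.

```shell
#!/bin/sh
set -eu

# Hypothetical scratch directory and file names (not from the original post).
workdir=$(mktemp -d)
cd "$workdir"

# 16 files of 8 MiB random data each: part_00 ... part_15.
for i in $(seq -w 0 15); do
    dd if=/dev/urandom of="part_$i" bs=1M count=8 2>/dev/null
done

# Concatenate in name order; the glob expands sorted, so part_00 comes first.
cat part_* > combined

# Sanity check: the combined file should be exactly 16 * 8 MiB.
combined_size=$(wc -c < combined | tr -d ' ')
echo "combined: $combined_size bytes"

# Then back up the parts first and the combined file second, for example:
#   restic --verbose=4 backup --tag=test part_*
#   restic --verbose=4 backup --tag=test combined
```

Random data is used so that any deduplication seen in the second backup can only come from chunks shared between the parts and the combined file, not from repetition inside the data itself.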
